Power Up! Winner: AI to improve developer experience

Frequent readers of VIA’s blog will know that twice a year we invest several days in “Power Ups!” These are days when 100% of VIAneers stop bumping their heads on their day-to-day tasks and think about ways we can improve the way we work.

This year, we focused on artificial intelligence (AI). VIAneers self-selected into 13 teams and came up with ideas to leverage AI. Ideas ranged from ethical guidelines for AI and ways to generate more blog content to using AI to support Kubernetes deployments.

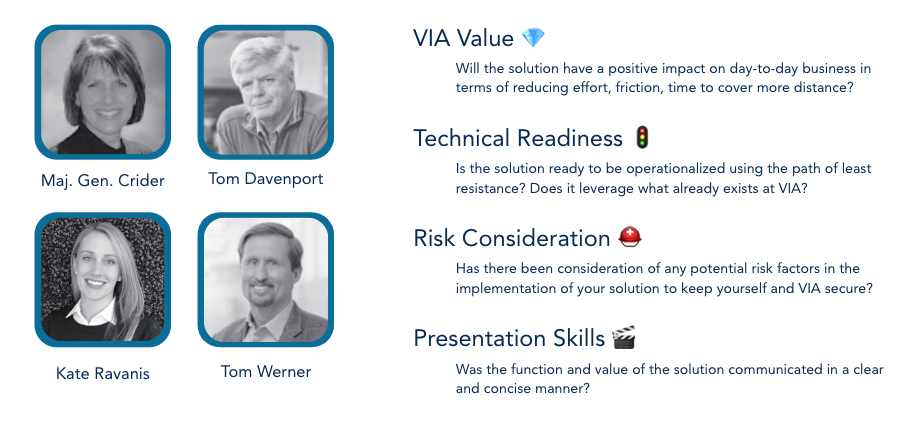

This Power Up! we upped the stakes and had the teams compete for a $1,000 USD prize to be spent on anything they would like. We were privileged to have Major General Kim Crider (ret.), former Chief Technology Innovation Officer of the United States Space Force; Tom Davenport, author of more than 11 books on analytics and AI; and Tom Werner, former CEO and Chairman of SunPower, join VIA’s COO, Kate Ravanis, as our panel of judges to select the winner.

Teams were judged on four criteria: VIA Value, Technical Readiness, Risk Consideration, and Presentation Skills.

All of our VIAneers did an incredible job of not just finding great ways to leverage AI but also implementing them within the 2.5-day Power Up! time limit.

But, there could only be one winner.

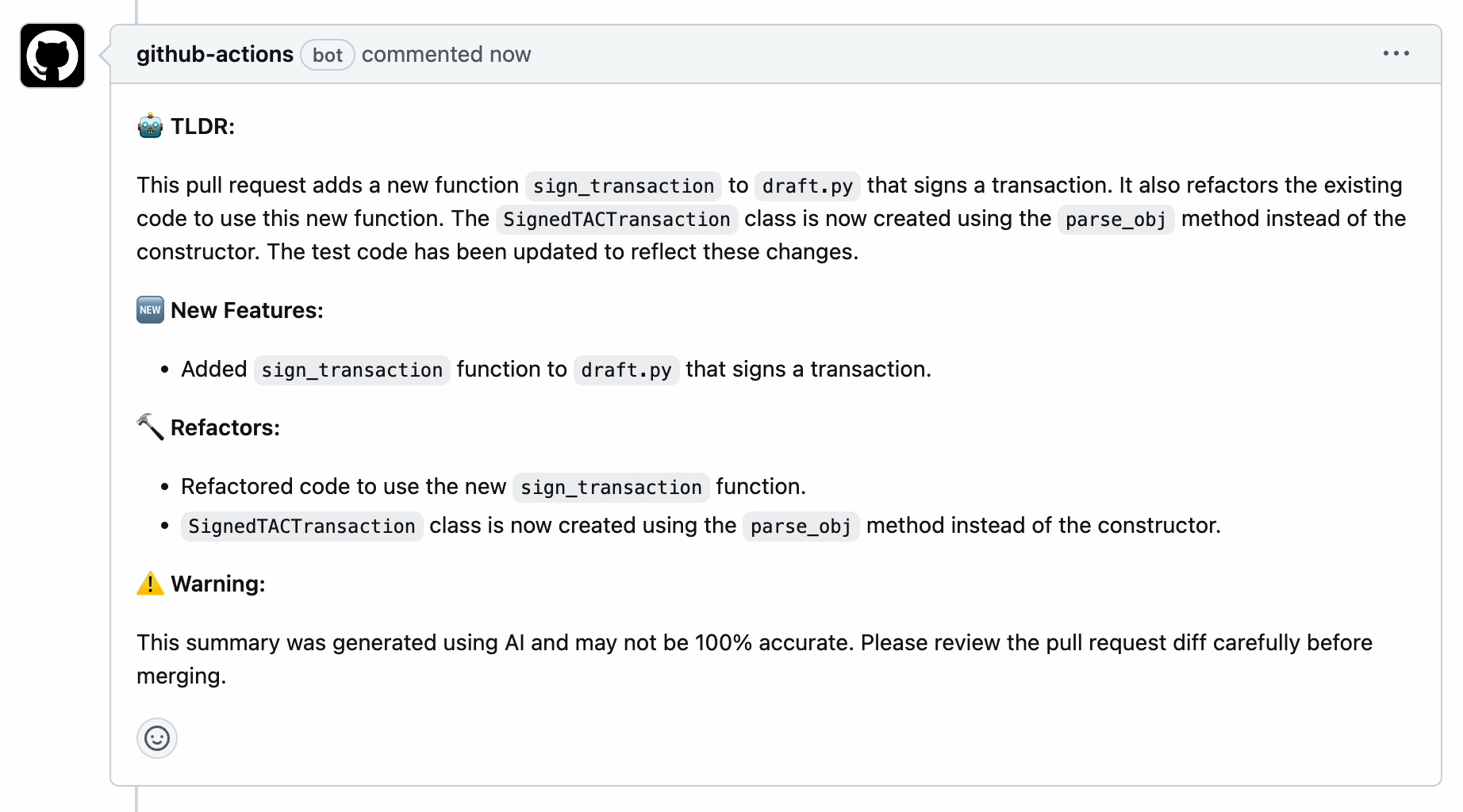

The team that won was the TL;DR team. As readers know, we love a good pull request (feedback on developer code changes). While we have a whole language system about how to provide feedback on pull requests, the TL;DR team figured out how AI could be used to write a summary of each pull request to aid reviewers.

“We found that all the teams had done an exceptional job generating ideas and taking a first pass at articulating the benefits and the implementation plans for their AI initiatives. What the judges thought made TL;DR stand apart was that the team had actually implemented their solution and reviewed internal data to quantify a savings of more than $250,000 per year with no additional costs. The fact that they had also thought through how to make the AI-generated summaries reflect VIA’s culture and values was a special bonus from my perspective,” said Kate Ravanis, VIA’s COO.

As the video below shows, the TL;DR team’s AI generates summaries of pull requests to speed up the code review process.

Here are a few examples of how the TL;DR team’s project excelled against the evaluation criteria in just a few short days:

- VIA Value – The team requested internal data and was able to quantify the expected value (over $250,000 per year) and provided a spreadsheet to show their work.

- Technical Readiness – The code was working and was demonstrated on a current VIA repo using VIA’s existing infrastructure. Bonus points: the AI model was directed to generate text that reflects VIA values and even uses emojis.

- Risk Consideration – The team evaluated external solutions but ultimately implemented an in-house solution to protect VIA’s intellectual property. A thoughtful addition was a warning attached to each response stating that it is generated by AI and may not be 100% accurate.

- Presentation Skills – Every team had a 5-minute limit, and TL;DR did a great job of summarizing their solution clearly and concisely.
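For readers curious what this kind of tool might look like, here is a minimal sketch of the idea described above: ask a model for a pull request summary in a friendly, emoji-friendly tone, then attach an AI warning to every response. This is not the TL;DR team's code. The function names (`build_prompt`, `summarize_pull_request`), the prompt wording, the disclaimer text, and the `generate` callable standing in for an in-house model are all assumptions made for illustration.

```python
from typing import Callable

# Hypothetical wording; the actual warning text used at VIA is not given in this post.
AI_DISCLAIMER = "⚠️ This summary was generated by AI and may not be 100% accurate."


def build_prompt(title: str, diff: str, max_diff_chars: int = 4000) -> str:
    """Assemble the instructions and pull request content sent to the model."""
    # Truncate very large diffs so the prompt stays within a bounded size.
    truncated = diff[:max_diff_chars]
    return (
        "Summarize this pull request for a code reviewer in a few bullet points.\n"
        "Use a friendly, constructive tone and feel free to use emojis.\n\n"
        f"Title: {title}\n\nDiff:\n{truncated}"
    )


def summarize_pull_request(
    title: str, diff: str, generate: Callable[[str], str]
) -> str:
    """Return a model-written summary with the AI warning appended.

    `generate` is any function that maps a prompt to text, for example a
    wrapper around a model hosted in-house (assumed here, not shown).
    """
    summary = generate(build_prompt(title, diff)).strip()
    return f"{summary}\n\n{AI_DISCLAIMER}"


if __name__ == "__main__":
    # Stand-in for a real model so the sketch runs on its own.
    def fake_model(prompt: str) -> str:
        return "✨ Renames `foo` to `bar` and updates the tests."

    print(summarize_pull_request("Rename foo", "- foo\n+ bar", fake_model))
```

Passing the model in as a plain callable keeps the sketch independent of any particular provider, which fits the in-house approach the team chose to protect intellectual property.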

Less than two weeks later, this solution is already live and in use by VIA developers.

Consistent with our long-standing commitment to improving developer communities everywhere, we will open source this project and make it available on GitHub after further testing at VIA.
